Workflow

The agent can automate the operations you would normally perform by hand to collect data. These actions bring the agent to the target web pages from which you need to extract data.

Set Input

The agent can enter text, select check boxes, choose items in lists, and perform similar input actions on the web page. You can configure the agent to ask the user for parameters before execution or to read them from text files. This lets you automate a range of different searches on the target web site. For more information about input data, see the Input Parameters chapter.
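
In the agent itself, input parameters are configured through the user interface. The Python sketch below is only a hypothetical illustration of the idea of reading parameters from a text file: each non-empty line becomes one search term, and each term becomes one search request. The functions `load_parameters` and `build_search_url`, the URL `https://example.com/search`, and the query parameter name `q` are all assumptions made for this example.

```python
from urllib.parse import urlencode


def load_parameters(lines):
    """Return one search term per non-empty line, with surrounding whitespace removed."""
    return [line.strip() for line in lines if line.strip()]


def build_search_url(base_url, term):
    """Append the term as a query string; the 'q' parameter name is hypothetical."""
    return f"{base_url}?{urlencode({'q': term})}"


if __name__ == "__main__":
    # In a real run the lines might come from open("params.txt"); they are inlined here.
    sample_lines = ["laptop\n", "\n", "usb hub\n"]
    for term in load_parameters(sample_lines):
        print(build_search_url("https://example.com/search", term))
```

Run as a script, this prints `https://example.com/search?q=laptop` and `https://example.com/search?q=usb+hub`. The blank line is skipped, and the space in "usb hub" is encoded as `+`.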

Follow Link

The agent can simulate a click on the page. Before you click, element highlighting must be disabled.

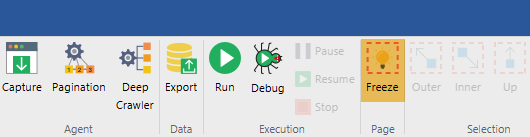

Disable Freeze in the application toolbar:

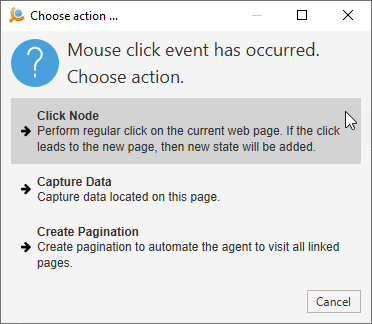

Otherwise, clicking an element only highlights it on the page. Click the link in the browser part of the agent. The selection dialog appears:

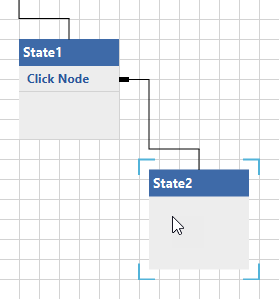

Select Click Node. The agent simulates a click on the page. If the click loads a new page, a new state is added to the agent, and a Click Node statement is added to the source state.
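
The bookkeeping described above can be pictured with a toy model. This is a hypothetical Python sketch, not the agent's internal representation. The `State` and `Agent` classes, the `click_node` method, and the CSS selectors are invented for illustration. The text above only covers the case where the click loads a new page. As an assumption, the sketch records nothing when no page is loaded.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class State:
    """One web page the agent has reached, plus the statements recorded on it."""
    url: str
    statements: List[Tuple[str, str]] = field(default_factory=list)


@dataclass
class Agent:
    """Hypothetical container for the states the agent has built so far."""
    states: List[State] = field(default_factory=list)

    def click_node(self, source: State, selector: str,
                   loaded_url: Optional[str]) -> Optional[State]:
        """Record a simulated click on `selector` in `source`.

        `loaded_url` is the page the click loaded, or None if no new page loaded.
        """
        if loaded_url is None:
            return None  # assumption: no page load means nothing is recorded
        # A new page was loaded: the source state gets a Click Node statement...
        source.statements.append(("Click Node", selector))
        # ...and the agent gets a new state for the loaded page.
        new_state = State(loaded_url)
        self.states.append(new_state)
        return new_state
```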

Follow Multiple Links

The agent can automatically visit multiple links in a table, list, or similar structure. This is typically used to scrape detail pages on a web site. The agent uses a list data pattern to collect a group of links on the web page. It then loads each linked page in turn and continues execution in the new state for that page. To learn how to configure the agent to visit detail links, see the Deep Crawler chapter.
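
The following Python sketch illustrates the collect-then-visit idea outside the agent. It is only an illustration: the agent's list data patterns are configured in its UI, not in code. Here a "pattern" is assumed to be a CSS class on `<a>` elements. The functions `collect_links` and `crawl_details` are hypothetical. The caller passes in `fetch` and `extract`, so the example runs on in-memory pages without network access.

```python
import re
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> element that has the given CSS class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.links = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if tag == "a" and self.css_class in classes and attr_map.get("href"):
            self.links.append(attr_map["href"])


def collect_links(list_html, css_class):
    """Return matching link targets in document order."""
    collector = LinkCollector(css_class)
    collector.feed(list_html)
    collector.close()
    return collector.links


def crawl_details(list_html, css_class, fetch, extract):
    """Load each detail page in order and return what `extract` pulls from it."""
    return [extract(fetch(url)) for url in collect_links(list_html, css_class)]


if __name__ == "__main__":
    # In-memory stand-ins for a list page and two detail pages.
    list_page = (
        '<ul><li><a class="item" href="/item/1">A</a></li>'
        '<li><a class="item" href="/item/2">B</a></li>'
        '<li><a href="/about">About</a></li></ul>'
    )
    detail_pages = {"/item/1": "<h1>Alpha</h1>", "/item/2": "<h1>Beta</h1>"}

    def title(page):
        match = re.search(r"<h1>(.*?)</h1>", page)
        return match.group(1) if match else None

    print(crawl_details(list_page, "item", detail_pages.__getitem__, title))
```

The `/about` link has no `item` class, so it is ignored, and the script prints `['Alpha', 'Beta']`.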

Pagination

The agent can handle pagination. It uses page patterns to locate next-page elements and follows them. You can read more about pagination here.
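
The sketch below shows a "find the next-page element and follow it" loop in Python. It is a hypothetical illustration rather than the agent's implementation. It assumes the next-page element is an `<a>` whose `rel` attribute contains `next`. In the agent, page patterns are set up in the UI and may match other elements. The names `find_next` and `paginate` and the `max_pages` limit are invented for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Remember the href of the first <a> whose rel attribute contains "next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        rel = (attr_map.get("rel") or "").split()
        if tag == "a" and "next" in rel and self.next_href is None:
            self.next_href = attr_map.get("href")


def find_next(page_html):
    """Return the next-page href found in the page, or None."""
    finder = NextLinkFinder()
    finder.feed(page_html)
    finder.close()
    return finder.next_href


def paginate(start_url, fetch, max_pages=100):
    """Yield (url, html) for each page, following next links until none is found.

    Already-visited URLs and the max_pages limit guard against endless loops.
    """
    url, seen = start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_html = fetch(url)
        yield url, page_html
        href = find_next(page_html)
        # Resolve relative links such as "p3" against the current page URL.
        url = urljoin(url, href) if href else None
```

Tracking visited URLs and capping the page count are design choices made here because some sites link the last page back to itself or to the first page. Without these guards, a naive loop could keep following links indefinitely.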