Extract Data From Simple Web Site

Welcome to your first WebSundew tutorial. In this tutorial you will learn how to scrape a basic web site and store the extracted data in an Excel file.

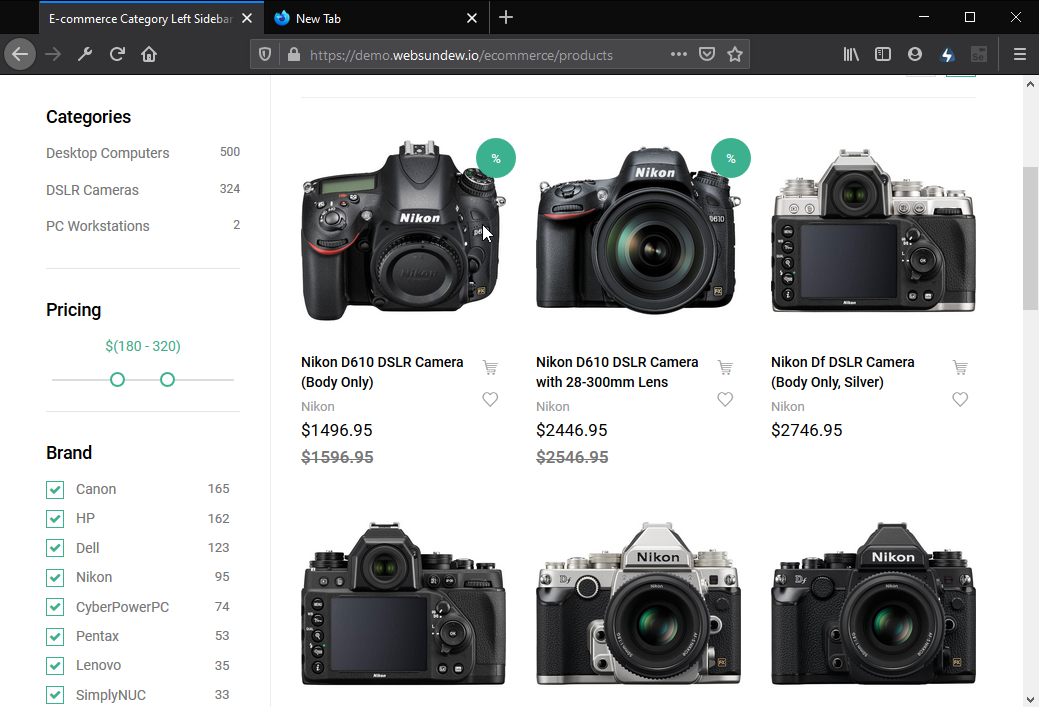

We will use one of our demo web sites (the e-commerce demo) to create the Agent. Because the demo site keeps the same structure we used in this tutorial, you can easily repeat every step.

The target web site is a typical e-commerce web site with a product list and paging. We will create an Agent that captures several product fields (title, price, brand and product link) from a single web page, and then automate the Agent to visit all linked pages.

If you have not downloaded and installed WebSundew yet, you can find information here and follow the instructions. If you have already installed it, start WebSundew by clicking the desktop icon or from the OS menu.

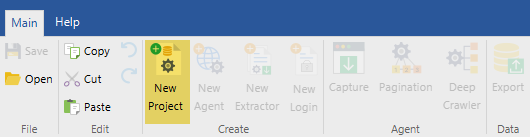

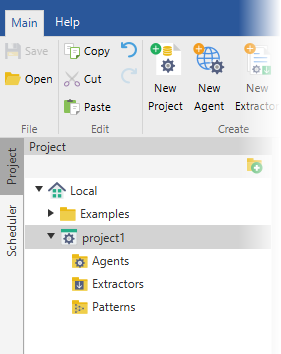

Step 1 - Create New Project

The first step is to create a new project. A project is the place where all components of the data extraction process are stored. Click New Project in the application toolbar.

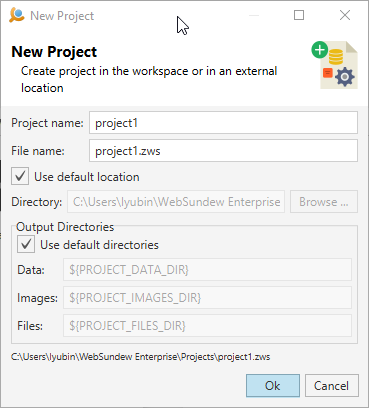

The New Project dialog will appear:

Enter a project name. It is best to use a name related to the target web site so that the project is easy to find later. Click Ok. The new project will be added to the project workspace.

Every time you run WebSundew, you will be able to access the project in the Project View.

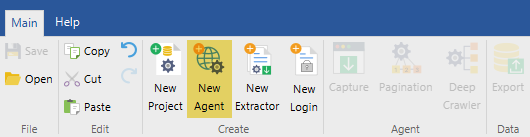

Step 2 - Create Agent

An Agent is one of the main concepts of WebSundew. It automates all the activity a user performs to collect web data, for example navigating web pages, clicking links, extracting data and storing it in a data storage.

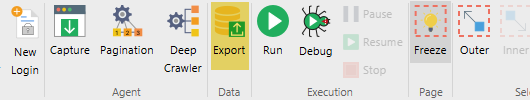

Click New Agent in the application toolbar.

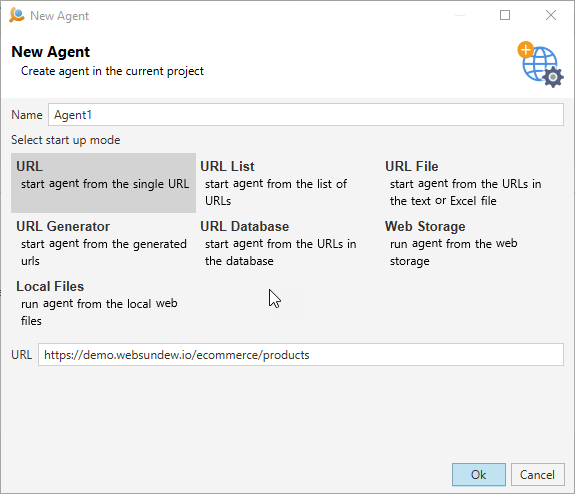

The New Agent dialog will appear:

Enter the URL of the target web site. For this tutorial we will use our demo e-commerce web site, https://demo.websundew.io/ecommerce/products. Click Ok.

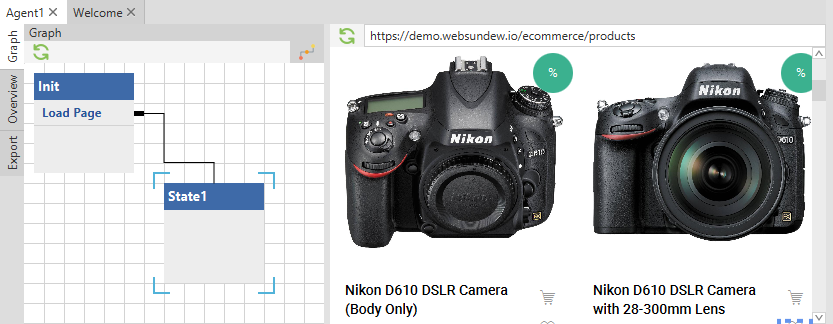

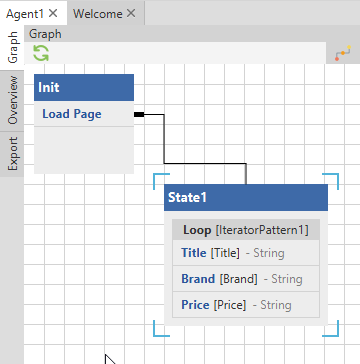

A new Agent will be created and added to the current project, and the Agent's editor will open. The created Agent will have two states: Init and State1.

Now we are ready to configure the Agent: capture some data and teach the Agent to visit all linked pages.

Step 3 - Capture Data

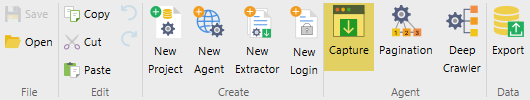

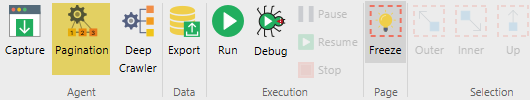

The target data is located on the web page associated with State1, so we do not need to add any actions to the Agent's workflow. Click Capture in the application toolbar.

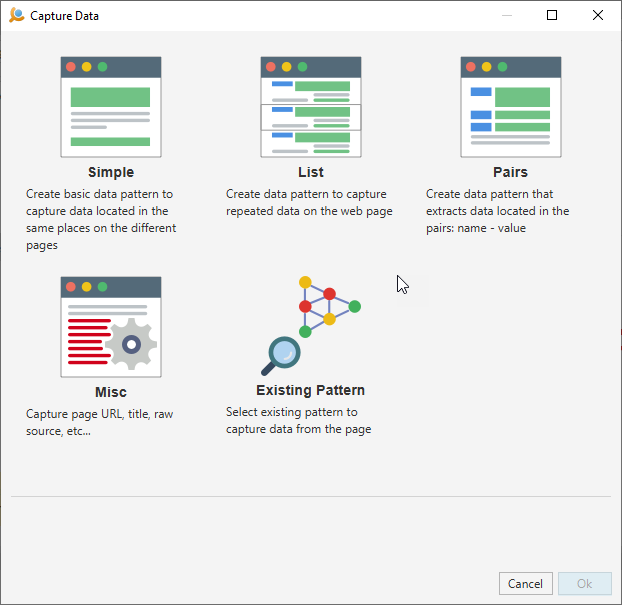

The Capture Selector dialog will appear:

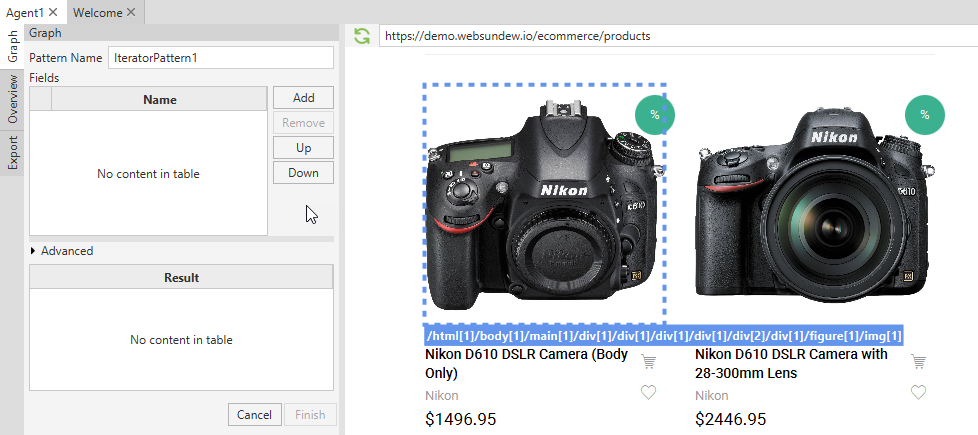

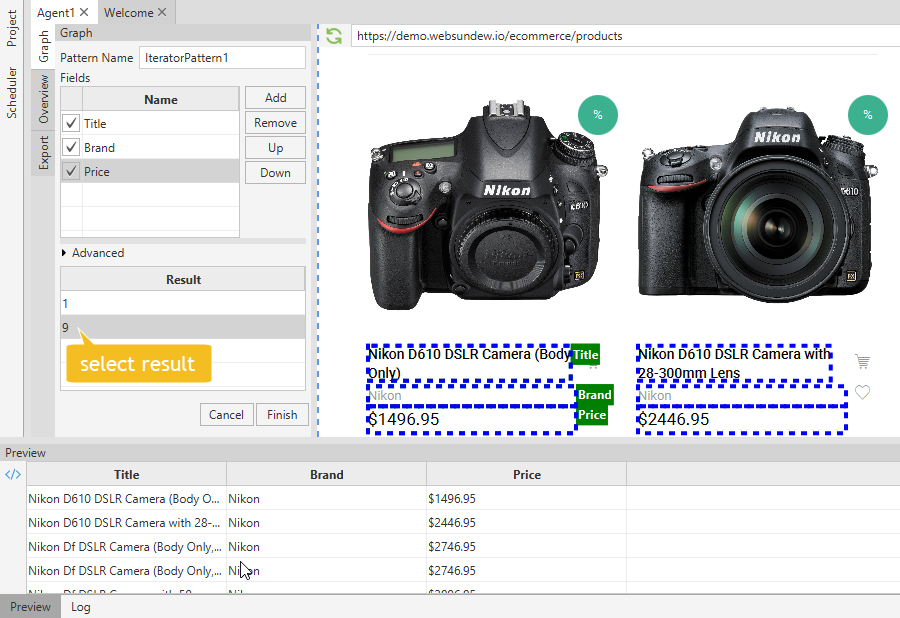

Select List, as we need to capture multiple items on the web page. Click Ok. The Data Iterator Pattern wizard will appear:

The Agent uses a data pattern to capture data on similar pages. You teach the Agent how to capture data on one of these similar pages, and it will automatically extract data from the rest of them.

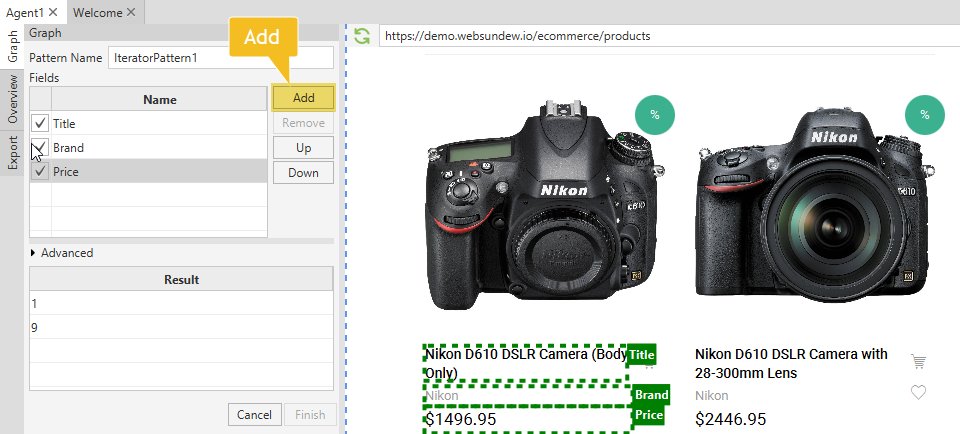

We will capture information about all cameras on the page: Product Title, Brand and Price. To create the data pattern, select and add all required elements from the first item on the web page. Click the product title, then click Add in the pattern wizard. Repeat the same action for the other fields.

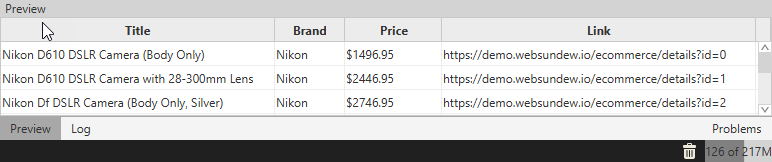

When you add the first field to the pattern, the pattern builder tries to locate similar items on the page, and you need to choose the appropriate result. Our web page contains 9 products, so click 9 in the result list. The matching items will be highlighted on the web page and shown in the Preview view.

Click Finish to complete the wizard. A new data iterator pattern will be added to the project, and a capture block will be added to the Agent's graph.
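WebSundew builds the data pattern for you through the wizard, so there is no code to write in this step. To illustrate the idea behind a list capture, here is a minimal Python sketch (not WebSundew's implementation) that applies one field pattern (title, brand, price) to every repeated product block on a page. The HTML snippet and its class names (`product`, `title`, `brand`, `price`) are hypothetical and are not the real markup of the demo site.

```python
from html.parser import HTMLParser

# Hypothetical markup: the class names are assumptions for illustration,
# not the actual structure of the demo e-commerce site.
SAMPLE_HTML = """
<div class="product">
  <a class="title" href="/ecommerce/products/1">Camera A</a>
  <span class="brand">Acme</span>
  <span class="price">$199</span>
</div>
<div class="product">
  <a class="title" href="/ecommerce/products/2">Camera B</a>
  <span class="brand">Zeta</span>
  <span class="price">$249</span>
</div>
"""

FIELDS = {"title", "brand", "price"}


class ProductListParser(HTMLParser):
    """Applies one field pattern to every repeated product block on the page."""

    def __init__(self):
        super().__init__()
        self.items = []     # one dict per product block
        self._field = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "div" and cls == "product":
            self.items.append({})  # a new repeated item starts here
        elif cls in FIELDS and self.items:
            self._field = cls

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.items[-1][self._field] = data.strip()


parser = ProductListParser()
parser.feed(SAMPLE_HTML)
parser.close()
for item in parser.items:
    print(item)
```

The pattern is defined once (the `FIELDS` set) and reused for every block, which mirrors how you select fields on the first product and let the pattern builder find the rest.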

Step 4 - Refine Captured Data

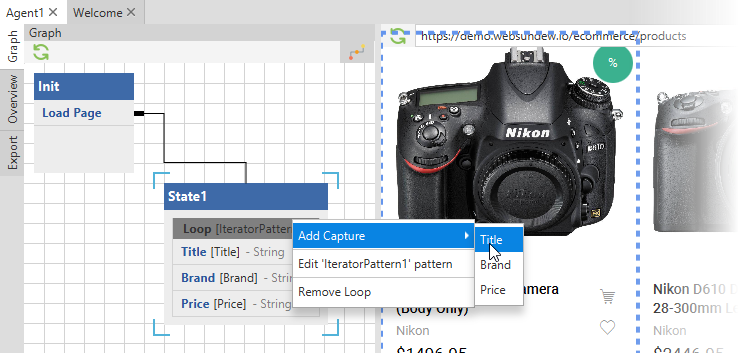

Now the Agent is able to capture the following fields: Product Title, Brand and Price. Notice that the product title is actually a link that contains the URL of the product's web page. We can extract this URL as well, but first we need to add another field. Right-click the Loop statement and select Add Capture / Title:

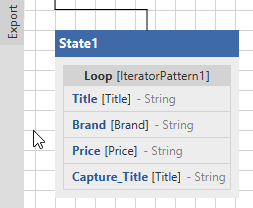

A new capture, Capture_Title, will be added.

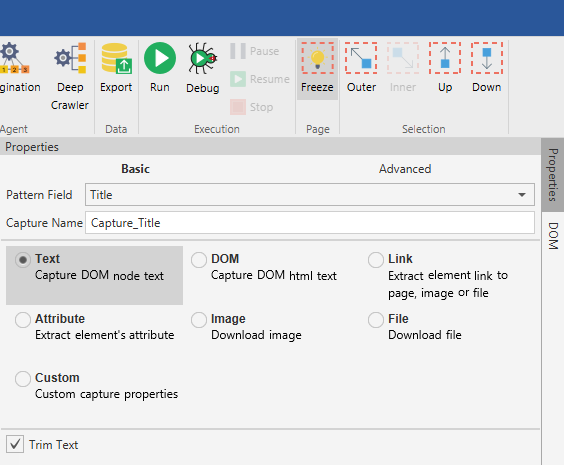

Click the newly created capture and activate the Properties view in the right view bar.

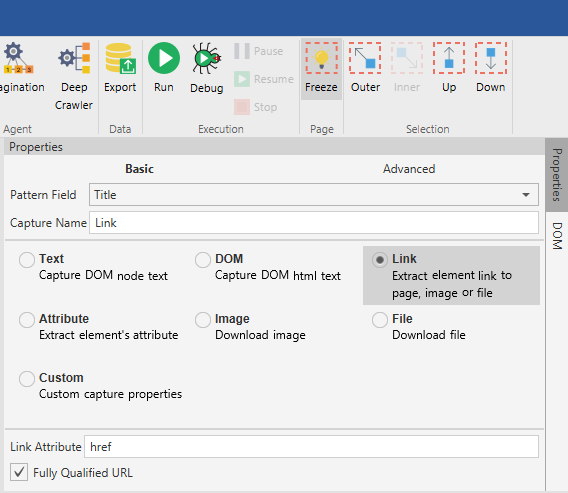

Change the type of the capture from Text to Link. You can also rename the capture to something more appropriate, such as Link or Url.

Check the captured data in the Preview view.
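The difference between a Text capture and a Link capture on the same element can be sketched in Python. This is an illustration under assumptions, not WebSundew code: the product path `/ecommerce/products/1` is hypothetical, and resolving a relative `href` against the page URL with `urljoin` is an assumption about how a link value is typically made absolute.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

PAGE_URL = "https://demo.websundew.io/ecommerce/products"


class TitleLinkParser(HTMLParser):
    """Reads one <a> element two ways: its visible text (like a Text capture)
    and its href attribute (like a Link capture)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.text = None
        self.link = None
        self._in_anchor = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._in_anchor = True
            href = dict(attrs).get("href")
            if href:
                # Resolve a relative href against the page URL.
                self.link = urljoin(self.base_url, href)

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_anchor = False

    def handle_data(self, data):
        if self._in_anchor and data.strip():
            self.text = data.strip()


# Hypothetical title element; the real demo markup may differ.
p = TitleLinkParser(PAGE_URL)
p.feed('<a class="title" href="/ecommerce/products/1">Camera A</a>')
p.close()
print("Text capture:", p.text)
print("Link capture:", p.link)
```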

Step 5 - Pagination

To collect all the data, the Agent should visit all pages.

Click Pagination in the application toolbar.

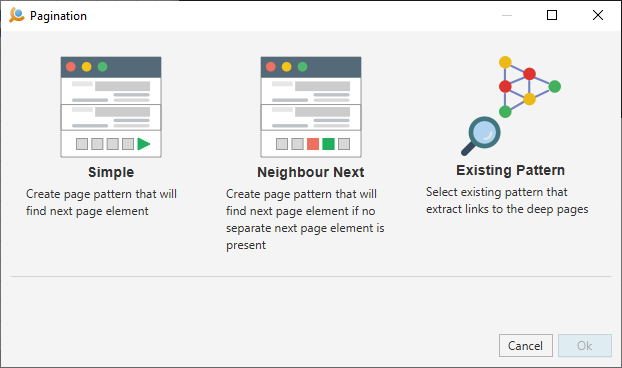

The Pagination wizard dialog will appear:

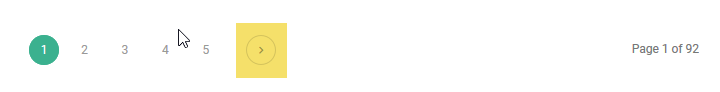

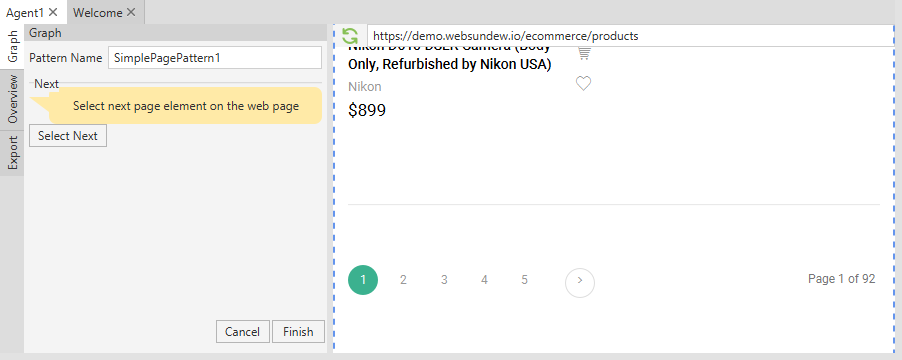

The web site uses simple pagination with a separate next page element, so choose the Simple page pattern type and click Ok. The Next Page Pattern wizard will appear:

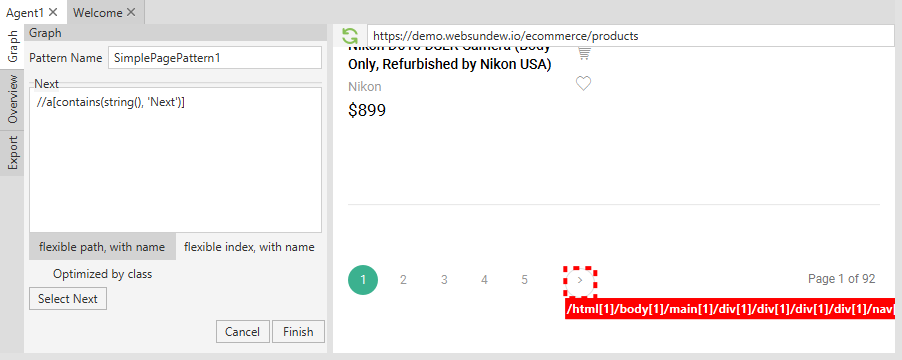

WebSundew uses a page pattern to find the HTML element that leads to the next page. Click the next page element in the browser part of the Agent's editor, then click Select Next in the wizard.

Click Finish in the wizard part of the Agent's editor. The next page pattern will be added to the project.

The Agent will use this pattern on all similar pages to find the next page element.
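Conceptually, pagination is a loop: capture the items on the current page, find the next page element, and repeat until there is none. Below is a minimal Python sketch of that loop. It is not how WebSundew is implemented; the in-memory `PAGES` dictionary and its `?page=N` URLs are hypothetical stand-ins for real web pages, so the example runs without network access.

```python
# Hypothetical in-memory "site": each page lists its items and the URL its
# next page element points to (None on the last page). No network is used.
PAGES = {
    "/products?page=1": {"items": ["Camera A", "Camera B"], "next": "/products?page=2"},
    "/products?page=2": {"items": ["Camera C", "Camera D"], "next": "/products?page=3"},
    "/products?page=3": {"items": ["Camera E"], "next": None},
}


def crawl(start_url, fetch_page, max_pages=100):
    """Follow next-page links from start_url, collecting items from each page.

    Stops when a page has no next link, a URL repeats, or max_pages is reached.
    """
    collected = []
    visited = set()
    url = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page = fetch_page(url)
        collected.extend(page["items"])  # apply the list capture to this page
        url = page["next"]               # apply the next page pattern
    return collected


all_items = crawl("/products?page=1", PAGES.__getitem__)
print(all_items)
```

The `visited` set and the `max_pages` limit are safety guards added for this sketch, so a next link that points back to an earlier page cannot cause an endless loop.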

Step 6 - Store Captured Data

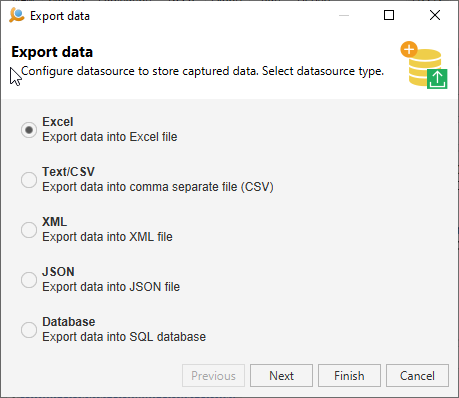

Now we are ready to store the captured data. Click Export in the application toolbar.

The Data Export wizard will appear:

Select Excel, then click Next.

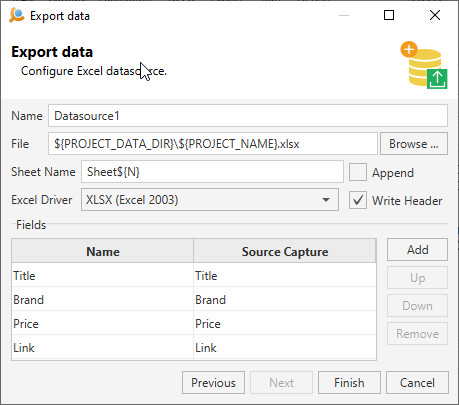

Configure the Excel properties or leave the default values. Click Finish. A data storage will be added to the Agent.
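WebSundew writes the Excel file for you. To picture what the exported table contains (one header row, then one row per captured item), the Python sketch below writes the same kind of rows with the standard `csv` module. CSV is used here only as a stand-in for Excel because it needs no third-party library; the field names and values are hypothetical.

```python
import csv
import io

# Hypothetical column names matching the fields captured in this tutorial.
FIELDNAMES = ["title", "brand", "price", "link"]


def export_rows(rows, out):
    """Write captured rows to a CSV stream: a header row, then one row per item.

    Fields missing from a row are written as empty cells instead of raising.
    """
    writer = csv.DictWriter(out, fieldnames=FIELDNAMES, restval="")
    writer.writeheader()
    writer.writerows(rows)


rows = [
    {"title": "Camera A", "brand": "Acme", "price": "$199",
     "link": "https://demo.websundew.io/ecommerce/products/1"},
    {"title": "Camera B", "brand": "Zeta", "price": "$249"},  # no link captured
]

buffer = io.StringIO()
export_rows(rows, buffer)
print(buffer.getvalue())
```

To write to a file instead of an in-memory buffer, open it with `open("products.csv", "w", newline="")` and pass the file object to `export_rows`.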

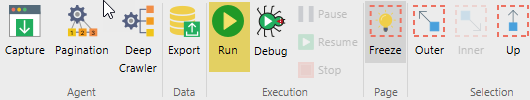

Step 7 - Run the Agent

Now we are ready to run the Agent. Click Run in the application toolbar.

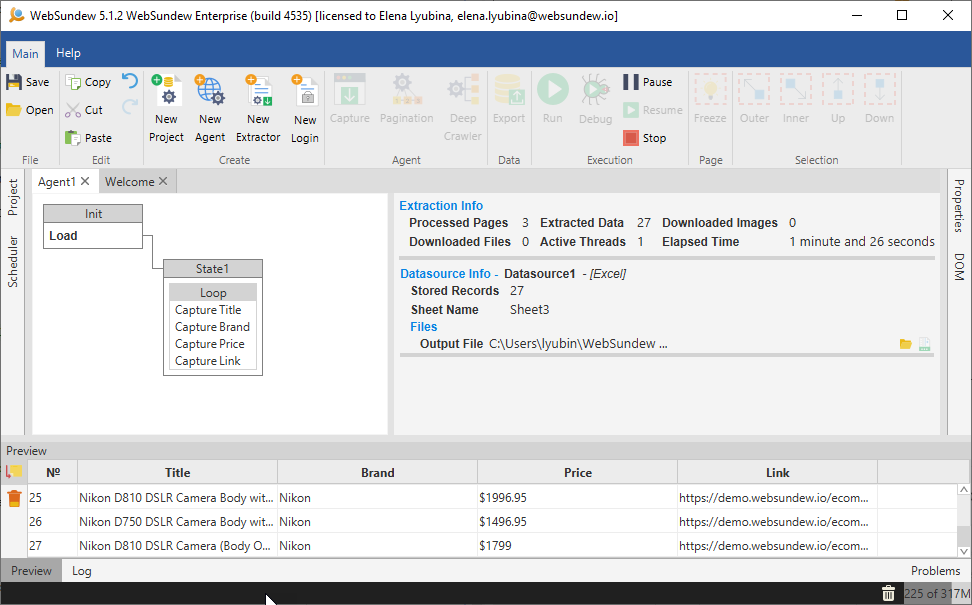

The Agent will start extracting the data.

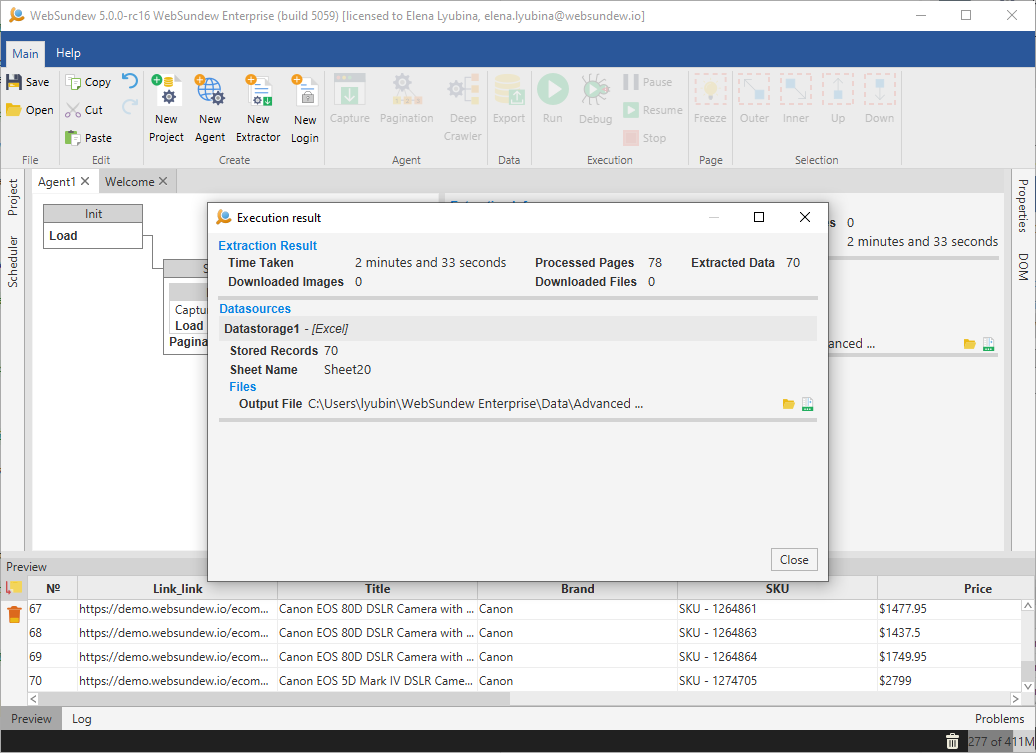

When the Agent finishes its work, a dialog will appear.